Please note that this post, first published over a year ago, may now be out of date.

The beauty of DevOps culture is that implementing it in your company is never just a goal in itself; it is always a journey. There is always something to improve. As the journey progresses and old problems are solved, new challenges arise. Maybe you are already using Infrastructure as Code and CI/CD pipelines to manage your production releases (as I’ve explained in my previous blog post) and it is working fine. That could mean it is time to think about what happens with your work in progress (WIP). In this context, I mean effort you’ve put into software and systems that hasn’t yet been deployed into your production environment where customers can benefit from it.

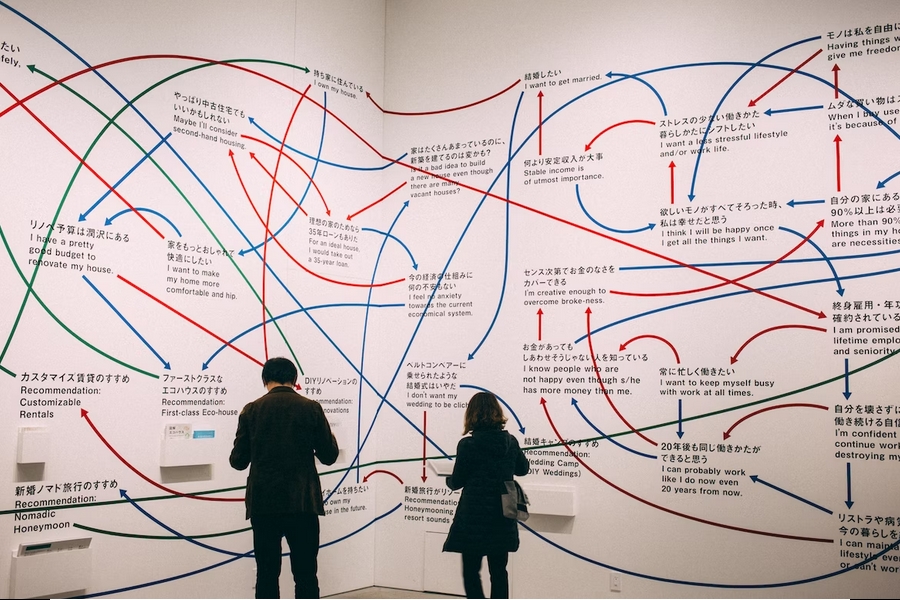

An appropriate approach to environment separation will help you avoid turning your architecture into spaghetti. Photo by Charles Deluvio

“It works for me”

I’m going to tell you a story.

When my friend, let’s call him Victor, asked the other day how work was going, I responded that I was having fun, and he wanted to know what exactly made it so much fun. I sent him a screenshot of GitHub Actions finally turning green: I am always happy when the automation I have built finally starts to work and makes life easier for everybody - as simple as pushing a button.

Victor asked what exactly CI/CD is, and what purpose it serves. As I could talk about it for hours, I responded that I would explain when we next meet, but for now I could share one of my previous articles about CI/CD to give him an overview. Victor is a data analyst, and I was sure he could benefit from understanding and applying that concept.

After a few hours, I got another message: he had actually read it, and now finally understood why his coworker - a developer - was so often frustrated with members of the team that takes care of operations and deployments. “Why is that?” - I wanted to know. “Let me share a part of the message my colleague sent to the whole company, tagging the CEO and CTO”, he responded.

The message basically stated that nobody knew why the newest version of an application didn’t work in the production environment (and proposed some time-consuming workarounds). The imaginary finger of blame was being pointed at the coders, even though they had no access to production. Nevertheless, the developers knew one thing - the same application worked fine in development. Apparently, there were important differences in how the development and production environments were set up. One potential solution the author of the message proposed was to invest the development teams’ effort in finding a workaround, while also stating that constructive feedback on the architecture layer was needed from the team responsible for it. I completely understood - although I didn’t know the details of the situation, investing developers’ effort in finding workarounds instead of their regular work sounded like killing a fly with an elephant gun.

“See, our DevOps team is not into automations and such things” - summarised Victor. I wanted to know what they were into, but it was quite unclear.

I am sure I don’t have to convince anybody that testing before release is worth doing, as you don’t want to push potentially breaking changes directly to production. But, as the above case study shows, simply having a separate environment is not enough. You may have heard of The Twelve-Factor App principles: a set of good practices for application design, authored by Heroku’s Adam Wiggins around 2011. One of these covers dev/prod parity - in other words, these environments should be as close to each other as possible, even regarding backing services.

Even if it is tempting to save costs on non-production infrastructure, there is a risk that code working in development may fail in production. These errors won’t be easy for the development team to debug, and may be impossible to debug at all if they don’t have easy access to that environment. Not only does this slow down releases, it also contributes to inefficient use of resources and diminishes the team’s productivity.

Getting into the rabbit hole of endless debugging, instead of focusing on delivering new value, can even have negative effects on team motivation. In the worst case, friction may appear between developers and the operations team, as in my friend’s case. It sounded like the situation had already resulted in the developers and the DevOps team blaming each other, while the application was still not working properly.

In my native Poland, we have our own version of a popular meme: “u mnie działa”. It means, roughly, “works on my machine”. My ex-colleagues even bought me a T-shirt with this one-liner as a farewell gift. I’d wanted to focus my career on IT - and it worked. My next role allowed me to understand this one-liner fully - especially in the context of divergent environments.

Endless deployments

Beyond having separate environments, I hope I’ve convinced you that these environments need to be configured similarly. Your developers want to be confident in the changes they are merging to the main branch, and to make sure their work won’t disrupt what was already done. In the end, nobody likes to feel like the cause of an outage (and not many people enjoy troubleshooting a broken live environment). At the same time, work on different functionalities should be kept separate for the sake of velocity and for ease of testing. We also want to merge WIP as soon as possible: branches should be short-lived to catch potential problems early and ensure code quality (according to the phrase “prevention is better than cure”).

So now the question is: how can you provide developers with an easy way to test their work in progress? That should come on top of integration and unit testing - and potentially before their work is checked by a QA team, if you have one. If people are working on the code independently, and you have only one test environment, there’s going to be a queue and the time inefficiency that comes with it.

On the other hand, you probably want your developers to focus on delivering business value, and not on struggling to spawn new infrastructure or on duplicating each other’s effort. These procedures vary a lot across organisations and sometimes even teams. The more complex and manual they are, the more time people need to spend familiarising themselves with them each time, and the more likely they are to forget how they did it. If they end up waiting for that one colleague who knows the infrastructure and operations details, the queue you didn’t even want grows even faster. All the while, that person’s other work is getting interrupted.

In the past, I have seen deployments that took weeks due to a lack of knowledge, experience, or the availability of people with that know-how. Each undocumented or unexpected discrepancy between environments just makes the process take even longer. In fact, to illustrate this, I can switch to writing about my own experience. In a previous position I had a developer colleague who always relied on other team members for deployments. Even though the process and instructions were documented, for some reason he was very hesitant about building container images and pushing them to the repository himself. This led to a situation where one other colleague and I were informally “specialising” in deployments, taking the load off others. This not only interrupted the normal course of our work, but also often meant deployments were blocked for days whenever nobody willing to use Docker was around.

One click deploys

That is where automation comes in handy. What if, instead of relying on other colleagues, each developer could just push a button to deploy all their work to a separate environment, without worrying about setting up the proper infrastructure or fighting with unfamiliar technologies? Furthermore, environments would be based on the principle of separating streams of value. As a developer, you would deploy and test changes from your workstream only (we can think of these workstreams as features). That may sound like magic, but as “any sufficiently advanced technology is indistinguishable from magic”, it would be just a simple CI/CD automation (see Arthur C. Clarke’s three laws). This can even replace developers fighting to set up a local environment on their own or, once they give up on that, issuing PRs to main blindly and hoping for the best. The goal is a pipeline that can be easily transferred across teams and projects, thanks to standardised tools such as CI/CD pipelines and Infrastructure as Code (e.g. Terraform).

Branch-based environments

Maybe you noticed that even though I have mentioned several problems in the above paragraphs and suggested solutions to them, one was left without a thorough explanation. Namely, I have not discussed how to separate different workstreams from each other when they’re changing the same application. As this concept was defined as the basic unit constituting the environment, let me start with an overview of environment strategies.

Probably the most common solution is to keep resources belonging to all environments in one account. This is not the best idea: it does not guarantee separation of concerns, and therefore does not minimise your blast radius in case of, for example, a security breach. It also introduces some management overhead when defining permissions (you don’t want the new junior developer to accidentally delete a production database). Therefore, we always suggest that our customers use different accounts on a given cloud provider platform for development and production (and for testing, if you want). For the sake of clarity, these accounts should be placed in the same cloud provider platform - it may seem obvious, but I have seen otherwise as well.

So far, so good. But what if this setup is not enough? For example, imagine you would like to have more environments for each developer to test their workstreams independently and not interfere with each other in the development environment. Maybe your team deploys very often and the development environment is always busy. Maybe you would like to isolate the process of testing two features, which turned out to interact with each other. Or maybe, for some reason, you need scalable ephemeral environments, so you don’t want to commit to usage of specific accounts, or not more than necessary.

For source code, the branch is a basic unit of work. It usually separates different functionalities and bug fixes from each other (let’s call these workstreams). There are multiple branching strategies, such as GitFlow or trunk-based development, but this time I will not dive deeper into this topic, as it deserves a separate article. The basic idea of working with version control systems is that, once a functionality has been developed on a specific branch, at some point a pull request is issued to merge this workstream into the main branch. This code should be tested before merging, including unit and integration tests, but sometimes this is not enough and code authors would like to see the code in action. In the end, nobody wants to be guilty of disrupting existing app functionality, so it is better to make sure before errors are spotted by the QA team or, even worse, by the end user. Besides, catching problems at this stage is usually cheaper.

If, as we can see, a branch is a basic unit of development work, and developers would like to see their code functioning before merging or even issuing a pull request, a natural choice would be to set up environments based on those branches. As already mentioned, branches are usually recommended to be short-lived for the sake of faster deployments and better code quality, therefore these environments should be ephemeral. It is possible thanks to CI/CD automations and an appropriate Infrastructure as Code setup.

Uniqueness and separation of environments can be achieved with pull request or push event triggers in the CI/CD pipeline. The branch name can then be extracted and passed to Infrastructure as Code as a parameter in order to create isolated resources. If you are using Terraform, you may want to consider using workspaces or Terraform Cloud for easier environment management, although the same approach is possible without them. You can try to set up this architecture on your own or ask external cloud consultants for support.
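
To make the branch-to-environment step more concrete, here is a minimal Python sketch of what a pipeline step could do. It is an illustration under several assumptions, not a description of any particular setup: the function names, the 30-character limit, and the Terraform variable called `environment` are all hypothetical. It also assumes GitHub Actions, where `GITHUB_HEAD_REF` holds the source branch for pull request events and `GITHUB_REF` holds the full ref for push events, and Terraform 1.4 or newer for the `-or-create` flag.

```python
import hashlib
import os
import re

MAX_LEN = 30  # assumed limit; many cloud resource names have tight length caps


def environment_name(git_ref: str, max_len: int = MAX_LEN) -> str:
    """Turn a ref such as 'refs/heads/feature/Login-Form' into a safe slug."""
    branch = re.sub(r"^refs/(heads|tags)/", "", git_ref)
    # Lowercase and collapse every run of other characters into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    if not slug:
        raise ValueError(f"cannot derive an environment name from {git_ref!r}")
    if len(slug) <= max_len:
        return slug
    # Long names are truncated; a short hash of the full branch name is
    # appended so two long branches sharing a prefix do not collide.
    digest = hashlib.sha256(branch.encode("utf-8")).hexdigest()[:6]
    return f"{slug[: max_len - 7].rstrip('-')}-{digest}"


def terraform_commands(env: str) -> list[list[str]]:
    """Build (but do not run) the commands for one isolated environment."""
    return [
        # One workspace per branch keeps each environment's state separate.
        ["terraform", "workspace", "select", "-or-create", env],
        # 'environment' is a hypothetical input variable used to name resources.
        ["terraform", "apply", "-auto-approve", f"-var=environment={env}"],
    ]


if __name__ == "__main__":
    ref = os.environ.get("GITHUB_HEAD_REF") or os.environ.get(
        "GITHUB_REF", "refs/heads/main"
    )
    for command in terraform_commands(environment_name(ref)):
        print(" ".join(command))
```

Because these environments are meant to be ephemeral, a matching teardown step (for example, triggered when the pull request is closed) would run the equivalent destroy command against the same workspace.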

Summary

Even though cutting down the number of running cloud environments may look like a short-term monetary gain thanks to lower infrastructure costs, it may cause friction and distort the deployment process in the long run. Letting developers see their proposed changes running in the cloud - and passing tests - gives people confidence to move fast and not break things. Regardless of which environment segregation or branching strategy you choose, make sure that the decision is well thought out and takes human factors into account. In the end, DevOps culture is about making life easier for everybody, and the procedures should be designed for people - not the other way around.

This blog is written exclusively by The Scale Factory team. We do not accept external contributions.