Please note that this post, first published over a year ago, may now be out of date.

These days it can seem like we don’t have to wait very long for another data breach involving an insecure S3 bucket. Such data breaches generally involve private data being stored in an S3 bucket which allows public access.

This year alone we’ve seen data breaches from Teletext Holidays, a third-party Facebook application, and Lion Air.

Data breaches like these can easily be avoided by following AWS good practices for securing S3 buckets. This includes ensuring that S3 bucket settings block public access.

Introducing s3audit

Today The Scale Factory are releasing [s3audit](https://www.github.com/scalefactory/s3audit), a CLI tool to audit S3 buckets within an account and report on common configuration issues.

We hope that releasing this tool makes it easier for teams to check their S3 bucket configurations, and helps them and their users avoid a data breach.

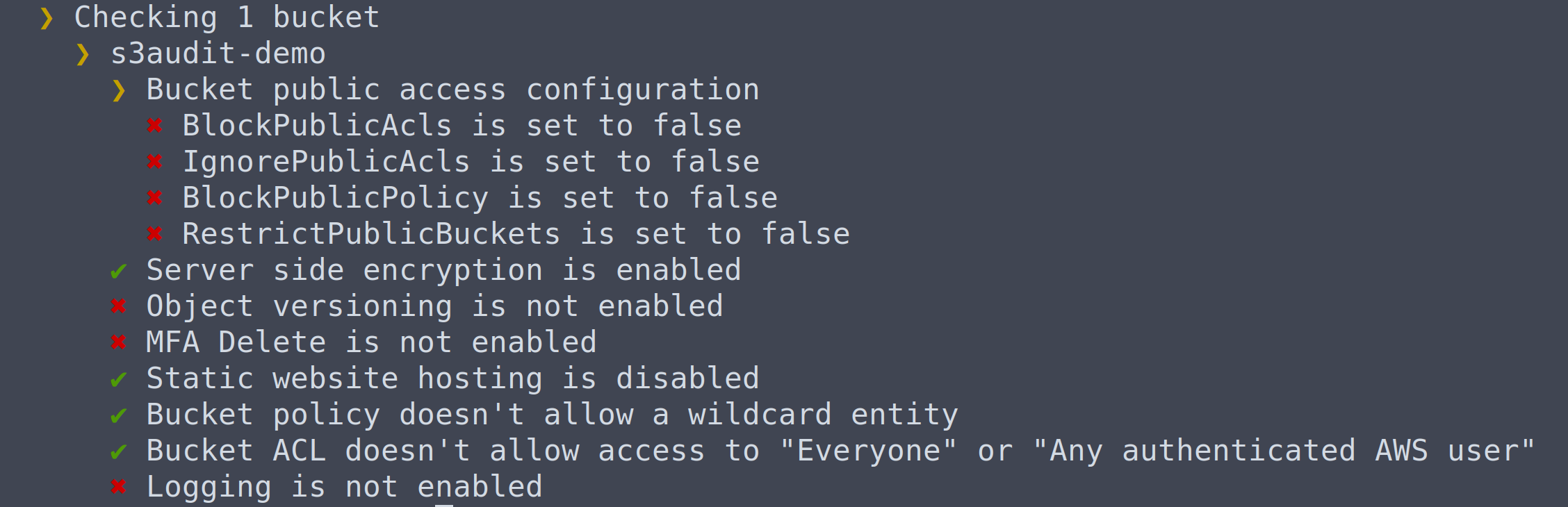

s3audit checks your buckets against a number of good practices to provide clear, actionable insights into the health of your configurations. These checks include:

- Does a public access configuration exist to block public access of the bucket and objects?

- Do bucket ACLs or policies allow public access?

- Is server side encryption enabled by default?

- Are object versioning and MFA delete enabled?

- Is static website hosting disabled?

You can run these checks against all of the buckets in an account, or a single bucket. An example of the output from running s3audit against a single bucket is:

Not all of these checks will be appropriate for all situations. You should review the output and determine which changes, if any, to make.
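To make the checks listed above a little more concrete, here is a minimal Python sketch of how three of them could be evaluated. It is not how s3audit itself is implemented. It assumes you have already fetched the bucket's settings, for example with the boto3 S3 client calls `get_public_access_block`, `get_bucket_acl` and `get_bucket_versioning`, and it only inspects the resulting dictionaries. The function name `audit_bucket` and the wording of the findings are hypothetical.

```python
from typing import Any, Dict, List

# Group URIs that S3 uses in ACL grants to mean "everyone" or
# "any authenticated AWS account".
PUBLIC_GRANTEE_URIS = {
    "http://acs.amazonaws.com/groups/global/AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers",
}

# The four settings in a bucket's PublicAccessBlockConfiguration.
PUBLIC_ACCESS_BLOCK_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def audit_bucket(
    public_access_block: Dict[str, bool],
    acl_grants: List[Dict[str, Any]],
    versioning: Dict[str, str],
) -> List[str]:
    """Return human-readable findings for one bucket (hypothetical helper).

    public_access_block: the PublicAccessBlockConfiguration dict, or {} if
        the bucket has no such configuration (the caller handles that error).
    acl_grants: the Grants list from the bucket ACL.
    versioning: the bucket versioning response (Status / MFADelete keys).
    """
    findings: List[str] = []

    # Every public access block setting should be switched on.
    for flag in PUBLIC_ACCESS_BLOCK_FLAGS:
        if not public_access_block.get(flag, False):
            findings.append(f"{flag} is not enabled")

    # Any grant to a global group makes the bucket ACL public.
    for grant in acl_grants:
        uri = grant.get("Grantee", {}).get("URI")
        if uri in PUBLIC_GRANTEE_URIS:
            group = uri.rsplit("/", 1)[-1]
            findings.append(f"ACL grants {grant.get('Permission')} to {group}")

    # Versioning and MFA delete are reported separately.
    if versioning.get("Status") != "Enabled":
        findings.append("Object versioning is not enabled")
    if versioning.get("MFADelete") != "Enabled":
        findings.append("MFA delete is not enabled")

    return findings
```

An empty list means the bucket passed these three checks. As with s3audit's own output, a finding is a prompt to review the bucket rather than proof of a problem.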

Get s3audit

You can download the latest release of [s3audit](https://github.com/scalefactory/s3audit/releases) from GitHub, or install it with npm:

$ npm install -g s3audit

s3audit offloads authentication to the AWS SDK, so you can continue to use any of the authentication methods that you already use with the AWS CLI. We recommend running s3audit with [aws-vault](https://github.com/99designs/aws-vault):

$ aws-vault exec <profile> -- s3audit

$ aws-vault exec <profile> -- s3audit --bucket=<bucket-name>

Ongoing auditing with AWS Config

Securing your S3 buckets doesn’t end there. To stay compliant, you should use AWS Config to audit your AWS resources on an ongoing basis.

AWS Config continuously monitors the state of your AWS resources and automatically checks them against defined rules. It can be integrated with CloudWatch, to alert when a change leaves a resource in a non-compliant state, and with Lambda, to automatically restore your resources to the desired state.

AWS provide a number of AWS Config Managed Rules which can be used to automate the auditing of your S3 buckets on an ongoing basis.
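As a rough illustration, the sketch below builds rule definitions for four S3-related managed rules and registers them through an AWS Config client. It assumes AWS Config is already recording in the account and region, and that you pass in a client such as `boto3.client("config")`. The helper names, the `s3-audit` name prefix and the particular selection of rules are our own choices for this example, not something s3audit sets up for you.

```python
from typing import Any, Dict, List

# Identifiers of AWS Config managed rules that relate to S3 buckets.
# This selection is an example; pick the rules that suit your account.
MANAGED_RULE_IDENTIFIERS = (
    "S3_BUCKET_PUBLIC_READ_PROHIBITED",
    "S3_BUCKET_PUBLIC_WRITE_PROHIBITED",
    "S3_BUCKET_SERVER_SIDE_ENCRYPTION_ENABLED",
    "S3_BUCKET_VERSIONING_ENABLED",
)


def build_config_rules(prefix: str = "s3-audit") -> List[Dict[str, Any]]:
    """Build one ConfigRule definition per managed rule (hypothetical helper)."""
    rules = []
    for identifier in MANAGED_RULE_IDENTIFIERS:
        rules.append(
            {
                # e.g. "s3-audit-s3-bucket-versioning-enabled"
                "ConfigRuleName": f"{prefix}-{identifier.lower().replace('_', '-')}",
                # Owner "AWS" marks this as a managed rule.
                "Source": {"Owner": "AWS", "SourceIdentifier": identifier},
                # Only evaluate S3 buckets.
                "Scope": {"ComplianceResourceTypes": ["AWS::S3::Bucket"]},
            }
        )
    return rules


def apply_config_rules(config_client: Any, rules: List[Dict[str, Any]]) -> None:
    """Create or update each rule using the client's put_config_rule call."""
    for rule in rules:
        config_client.put_config_rule(ConfigRule=rule)


# Usage, assuming boto3 is installed and credentials are available:
#   import boto3
#   apply_config_rules(boto3.client("config"), build_config_rules())
```

Keeping the rule definitions separate from the call that applies them makes it easy to review exactly what will be created before anything changes in your account.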

Conclusion

Hopefully this tool will help you quickly gain insights into the current state of your S3 configuration and avoid data breaches. If you need help securing your AWS accounts, get in touch with The Scale Factory.

Check it out at GitHub: scalefactory/s3audit


This blog is written exclusively by The Scale Factory team. We do not accept external contributions.