Please note that this post, first published over a year ago, may now be out of date.

Thinking of using Fargate Spot to save money serving your website? It’s not as simple as it looks.

Before I dive in to why I think that, let’s just recap on some of the technology involved here.

Fargate is serverless container execution. You can drive it via AWS' Elastic Container Service (ECS) - that was actually called EC2 Container Service right until they added Fargate to it, or via Amazon EKS, which is AWS’ managed Kubernetes solution.

Spot pricing is supposed to be all about paying a market price for compute. It’s not actually a market because there’s one seller, AWS, and you pay what they’re offering. The main thing is that the price changes over time and it’s usually a lot lower than on-demand pricing for the same service. It’s cheap because AWS, or whichever provider you’re buying services from, reserves the right to switch it off and sell the same capacity to someone else.

We’ve done a whole load of reviews for our customers’ infrastructure that take the cost of running things into account. Most of the time when people are using EC2 to run their workload, we’re very happy to recommend switching some or even all of it to run using spot pricing. Since December, we’ve also been able to discuss the same switch for Fargate.

In fact, it’s a pretty poor experience. Let’s say you want a base load

of 2 ECS tasks as on-demand, spread across some availability zones.

You want autoscaling to add extra tasks by task tracking a metric,

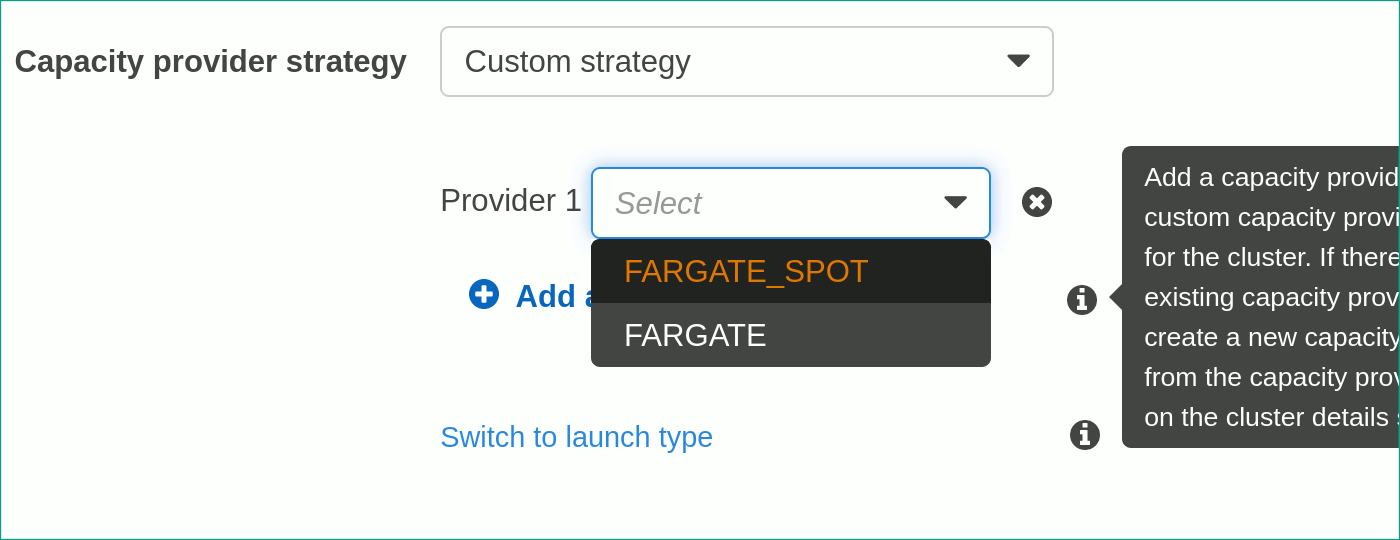

and because you’re cost conscious you favour the FARGATE_SPOT

capacity provider, with a 2:1 ratio of Spot to on-demand tasks. This

isn’t what you actually wanted, but its close enough and easy to

configure.

Depending on the region(s) you’re using, the scale you’re running at, and what the rest of the world is running on their own infrastructure, you might wait anything from just a few minutes to a few days before you see your first interruption event.

Well, actually, that’s my main niggle. You probably will need to handle those task interruption events - more on that later - whereas with EC2 you can usually make a note that the events are available if you want them and get on with something else.

With EC2 Spot, you can set a maximum price you’re willing to pay for a spot instance. On Fargate Spot there’s a fixed on-demand price for a region and a fixed spot price as well, there’s no price history API or anything. What that means is you can’t bid a bit higher in return for fewer terminations.

You might be thinking that you should plan for tasks getting killed, and you’d be right. There’s a review question about that too. No, the problem isn’t that you didn’t plan for tasks getting killed. It’s how the load balancer handles it when they do.

You set up a load balancer, or maybe an API Gateway, to send traffic to

your ECS Service. It’s a website, so you need to distribute those

requests across available tasks. After the task state change event comes

in, the task’s containers get a 30 second grace period (you can configure

it up to 2 minutes). What actually happens is that ECS sends a SIGTERM

to PID 1 in each container. If your app is like most webservers, it takes

the hint and stops. Meanwhile, your load balancer is happily sending

traffic to a now-stopped task.

Eventually, the health checks kick in and the load balancer deregisters the target. This is what makes me wince. With interruptions happening several times per hour or more, what might have felt like a straightforward cost-saving trick turns into a good way to blow your error budget. In my mind, your customers seeing a 500 error because Spot is reclaiming capacity is unacceptable, particularly as Fargate itself registers the task with the target group in the first place.

AWS is happy to give you all the ingredients to make Fargate Spot work for you. Set up EventBridge to run a lambda that deregisters the target. Or teach your app to hold off shutting down, failing its health checks deliberately so that the load balancer stops sending traffic.

It’s all there. It’s also all work that you have to do, and the effort it takes often doesn’t pay off. Bear in mind that if you did the sensible thing and used an existing webserver container or webserver library, it probably doesn’t have that special termination event behaviour built in. Why would it?

Spot tasks on Fargate have their uses. Feel free to use this for your test environment, where the extra errors keep things interesting. Any batch tasks that you can restart at will, like rendering single frames of an animated video, are a great fit. If you can guarantee that all the clients of your API cope with errors and retry properly, you can happily switch to spot for that too.

For a typical webapp that you serve to visitors and needs to Just Work pretty much all the time, I really wouldn’t. Host it on EC2 instead with spot as part of the fleet, or use standard Fargate and buy a savings plan. You’ll be up and running much quicker, and you’ll stay up.

Ready to discover what your AWS environment might be hiding? Book a complimentary AWS health check to get a clear picture of your cloud spending and uncover immediate cost optimisation opportunities.

For qualifying customers, we generally recommend our ongoing Cloud Cost Optimisation service that includes regular cost reviews at no additional cost. It’s how we help businesses maintain control of their cloud costs long-term, and it might just save you thousands.

This blog is written exclusively by The Scale Factory team. We do not accept external contributions.