Please note that this post, first published over a year ago, may now be out of date.

A common strategy when using CodePipeline is to upload files to an S3 bucket as part of the deployment process.

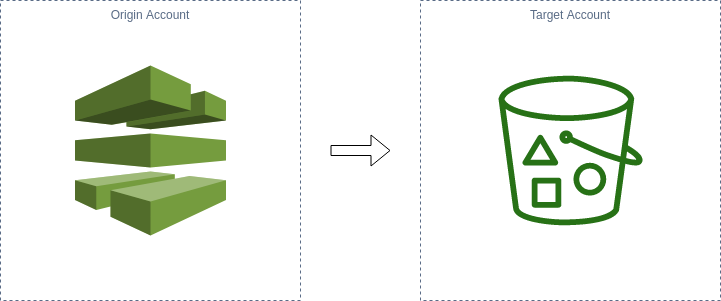

This works great for a simple pipeline where the pipeline and the S3 bucket exist in the same account. But imagine a more complicated situation where you want to deploy artifacts to another AWS account. Such a situation can easily arise if you follow AWS good practice and use different AWS accounts for separation of concerns.

You may be tempted to think that this would be easy. After all, S3 cross-account access is easy, isn’t it? Set up cross-account access for your CodePipeline role to the S3 bucket and you’re done, right? Unfortunately, wrong. A little-understood fact about cross-account access with S3 is that when an IAM entity in one account uploads an object to a bucket in another account, the object is still owned by the uploading account.

This can cause issues later down the line when you come to access the object. In particular, you may want to use the files uploaded to the S3 bucket in a pipeline in another account. This can be useful for promoting artifacts between environments. Unfortunately though, this won’t work. Because the objects in S3 are owned by the origin account, a CodePipeline pipeline in the same account as the S3 bucket won’t be able to use the objects as an artifact.

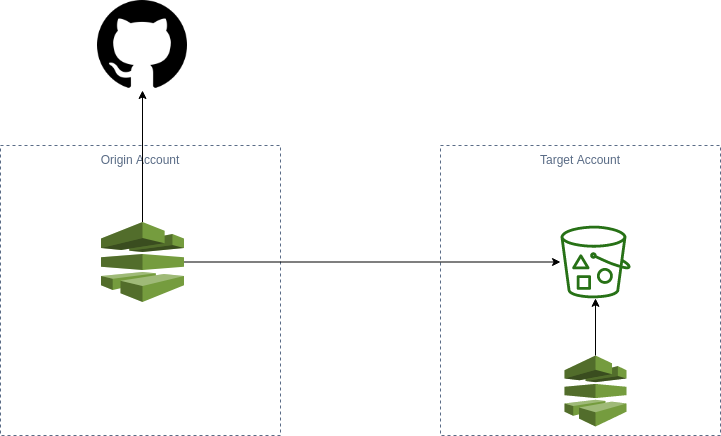

The solution is to create an IAM role in the account that holds the S3 bucket (the target account). This role has the permissions required to upload objects to the bucket. The pipeline’s deploy action in the other account (the origin account) then assumes this role, so the objects it uploads are owned by the target account.
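As a rough illustration, the role in the target account could be sketched in Terraform along these lines. This is a minimal sketch and not necessarily how the reference module does it: the variable names, role name, and policy name are hypothetical, and a real role would also need read access to the pipeline's artifact bucket and its KMS key, which is left out here.

```hcl
# Applied in the TARGET account (the one that owns the S3 bucket).
# All names below are hypothetical placeholders.

variable "origin_account_id" {
  type        = string
  description = "ID of the account that runs the pipeline"
}

variable "target_bucket_name" {
  type        = string
  description = "Name of the bucket that receives the deployed files"
}

# Trust policy: lets principals in the origin account assume this role.
# Trusting the account root delegates the decision to IAM policies in the
# origin account; you may prefer to name the pipeline role ARN directly.
data "aws_iam_policy_document" "trust" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${var.origin_account_id}:root"]
    }
  }
}

resource "aws_iam_role" "deploy" {
  name               = "cross-account-s3-deploy"
  assume_role_policy = data.aws_iam_policy_document.trust.json
}

# Permissions policy: allows uploads into the target bucket only.
data "aws_iam_policy_document" "upload" {
  statement {
    actions   = ["s3:PutObject"]
    resources = ["arn:aws:s3:::${var.target_bucket_name}/*"]
  }
}

resource "aws_iam_role_policy" "upload" {
  name   = "upload-to-target-bucket"
  role   = aws_iam_role.deploy.id
  policy = data.aws_iam_policy_document.upload.json
}
```

On the origin side, the pipeline's own service role also needs permission to call `sts:AssumeRole` on this role's ARN, otherwise the deploy action cannot switch into it.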

We’ve created a reference module for Terraform, available on GitHub, that demonstrates how to set up this pipeline. It creates the S3 buckets, IAM roles, KMS keys, and CodePipeline pipeline needed for a cross-account deployment.

Without modification, this reference module creates a simple CodePipeline pipeline that is triggered by a commit to a GitHub repository and uploads the files from GitHub to S3. That’s not very useful on its own, but it should serve as a good starting point for your own solution.
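For orientation, a pipeline of this shape might look roughly like the following in Terraform. Treat it as a sketch under assumptions, not as the module's actual code: every variable, the repository ID, and the branch name are hypothetical, and the source stage assumes a GitHub connection has already been created. The part that matters is the `role_arn` on the deploy action, which makes that action run as the role in the target account.

```hcl
# Applied in the ORIGIN account (the one that runs the pipeline).
# All variables are hypothetical placeholders.

variable "pipeline_role_arn" { type = string }      # pipeline service role
variable "artifact_bucket_name" { type = string }   # artifact store bucket
variable "artifact_kms_key_arn" { type = string }   # key shared with the target account
variable "connection_arn" { type = string }         # existing GitHub connection
variable "target_deploy_role_arn" { type = string } # role in the target account
variable "target_bucket_name" { type = string }     # bucket in the target account

resource "aws_codepipeline" "example" {
  name     = "cross-account-example"
  role_arn = var.pipeline_role_arn

  artifact_store {
    location = var.artifact_bucket_name
    type     = "S3"

    # A customer managed key, so that the target account's role can be
    # granted permission to decrypt the artifacts.
    encryption_key {
      id   = var.artifact_kms_key_arn
      type = "KMS"
    }
  }

  stage {
    name = "Source"

    action {
      name             = "Source"
      category         = "Source"
      owner            = "AWS"
      provider         = "CodeStarSourceConnection"
      version          = "1"
      output_artifacts = ["source"]

      configuration = {
        ConnectionArn    = var.connection_arn
        FullRepositoryId = "example-org/example-repo" # hypothetical repository
        BranchName       = "main"
      }
    }
  }

  stage {
    name = "Deploy"

    action {
      name            = "Deploy"
      category        = "Deploy"
      owner           = "AWS"
      provider        = "S3"
      version         = "1"
      input_artifacts = ["source"]

      # Run this action as the role in the target account, so the
      # uploaded objects belong to the account that owns the bucket.
      role_arn = var.target_deploy_role_arn

      configuration = {
        BucketName = var.target_bucket_name
        Extract    = "true"
      }
    }
  }
}
```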

You can check out an example here: scalefactory/terraform-codepipeline-crossaccount-example

This blog is written exclusively by The Scale Factory team. We do not accept external contributions.