Please note that this post, first published over a year ago, may now be out of date.

It’s 2019 when I’m writing this, and I’m still a big fan of command line tools.

I’m going to run through some patterns for command line tools that let you leave room for future work, and explain what makes these so powerful.

My first encounter with the command line was in the 1980s. Schools could, and did, afford to put in enough computers to use them in teaching. You could buy a home computer without needing to sell your home first. You turned the thing on and it would wait for you to type something in to tell it what to do.

Command lines are great for quick, ad hoc automation. You can combine a few existing components to process some data or achieve a task. This isn’t just faster than writing your own tool to do all of that. If you frequently work at the terminal, improvising a pipeline out of existing tools is often quicker than finding a tool that’s dedicated to the task you have in mind and making use of it. People who use the command line a lot talk about having muscle memory not just for keystrokes but for whole commands.

The first way to build on that muscle memory is to use subcommands. For example, on my laptop I have systemctl as the main way to interact with the init process and its affiliated tooling. This is kind of handy as there are 64 different subcommands. I don’t remember all of them and there are some I don’t think I’ve ever tried.

What subcommands get you is command completion. Let’s say I want to see all the timer units that are running. What I’ll do is type systemctl, hit Tab on my keyboard, and search for a likely-looking subcommand. Pretty soon I’ve found systemctl list-timers --all, and I’m done. This saved me having to find or remember some command with a more obscure name like timerctl or atq.

If you’re on the authoring side, making life easy for your users means more work for you — that’s often the way with coding. You have to implement a completion helper for each different kind of shell (eg bash, zsh, fish) and you need to give end users some way to turn that completion on. The more things that your command can do, the more that your users will thank you.

To make subcommands that help your users, think about structure. Tools with just one main command, like echo, usually represent an action (verb), followed by some items (nouns) to act upon. With subcommands, the last thing before the parameters should also be a verb, eg:

- git stash pop

- aws sts get-caller-identity
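Here’s a minimal sketch of that shape using Python’s argparse module. The tool name mytool, its stash group and the --index option are all made up for illustration; the point is only that the group comes first and the verb comes last, just before the parameters.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser for a hypothetical tool called 'mytool'."""
    parser = argparse.ArgumentParser(prog="mytool")
    groups = parser.add_subparsers(dest="group", required=True)

    # "stash" groups related actions together (a made-up feature area).
    stash = groups.add_parser("stash", help="manage stashed changes")
    verbs = stash.add_subparsers(dest="verb", required=True)

    # The last word before the parameters is a verb.
    pop = verbs.add_parser("pop", help="restore a stash entry")
    pop.add_argument("--index", type=int, default=0)  # hypothetical option

    verbs.add_parser("list", help="show stash entries")
    return parser
```

Calling build_parser().parse_args(["stash", "pop", "--index", "2"]) gives you the group, the verb and the parameter as separate fields, so each level can get its own help text and its own completion.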

If you’ve used PowerShell, you might notice that AWS’ commands look a lot like the verb-noun structure of PowerShell commands. Verb-noun names are a nice idea, but they’re a poor fit for completion: try loading a few modules, type “Get-” and hit Tab. You’ll be overwhelmed with possibilities.

Structured subcommands let you limit the scope of the next completion to something manageable. (As an example, there are over 5000 verb-noun type commands for the AWS CLI tool; with new services that’s only going to get bigger). You don’t need to load a module or install a new tool when you want to use a new kind of subcommand: it’s ready for use straight away.

kubectl, the Kubernetes CLI tool, adds another approach. Kubernetes is designed as a platform for making your own platform, so you might have custom resources or other extensions to your cluster. People who work on that cluster will want to use standard kubectl; they won’t be keen on installing a different management tool for each cluster they work with.

kubectl has a fairly small set of key verbs: apply, get, delete, describe, alongside others that have specific uses (eg exec). You can use get, apply, or delete to manage different kinds of resource — and you get tab completion too. If you deploy a new ClusterThingy resource, you can kubectl get ClusterThingy without looking up which subcommands work for that resource.
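To see why this scales, here’s a toy sketch of the idea: a fixed set of verbs that work on whatever resource kinds are registered. This is not how kubectl is implemented (the real tool talks to the cluster’s API server); the Cluster class, its methods and the run function are all hypothetical names for illustration.

```python
from typing import Dict, List


class Cluster:
    """A toy stand-in for a cluster: maps each resource kind to object names."""

    def __init__(self) -> None:
        self.objects: Dict[str, List[str]] = {"pod": ["web-1", "web-2"]}

    def register_kind(self, kind: str) -> None:
        # Adding a new kind does not require adding any new verbs.
        self.objects.setdefault(kind.lower(), [])

    def add(self, kind: str, name: str) -> None:
        self.objects[kind.lower()].append(name)


def run(cluster: Cluster, argv: List[str]) -> List[str]:
    """Dispatch 'VERB KIND [NAME...]' against the toy cluster."""
    verb, kind, *names = argv
    kind = kind.lower()
    if kind not in cluster.objects:
        raise SystemExit(f"unknown resource kind: {kind}")
    if verb == "get":
        return list(cluster.objects[kind])
    if verb == "delete":
        cluster.objects[kind] = [n for n in cluster.objects[kind] if n not in names]
        return names
    raise SystemExit(f"unknown verb: {verb}")
```

After cluster.register_kind("ClusterThingy"), the call run(cluster, ["get", "ClusterThingy"]) works straight away, even though nothing in run mentions that kind.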

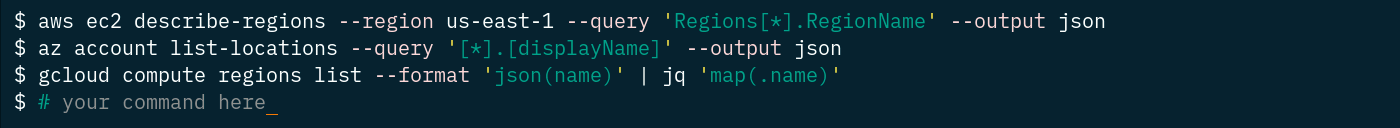

So far, I’ve explained two kinds of extensibility for CLI utilities. The big cloud providers all give you a command line tool where they can add subcommands that follow an existing pattern. The Kubernetes tool kubectl is ready for your cluster to support custom resources its coders hadn’t even thought of. Next, I’ll explain how tools can let users extend them even further.

Tools like cargo, git and kubectl support third-party subcommands. They’re designed so you can add behaviour via new subcommands, without needing to touch the tool itself. For example, I can run git show-merged-branches to list all of the branches I have locally that already got merged. To make that work, I wrote a three-line script, named it git-show-merged-branches, and put it in my PATH.

This way, adding your own subcommand really can be that simple:

- Write the code that implements your new feature.

- Save it to a file in your PATH named for the command and subcommand.

- There is no step 3!
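As an illustration, here’s one way a script like git-show-merged-branches could look in Python. It’s a guess at the general idea, not the actual three-line script mentioned above; parse_branches and main are names chosen for this sketch. It relies on git branch --merged, which lists branches already merged into the current HEAD.

```python
#!/usr/bin/env python3
import subprocess
from typing import List


def parse_branches(output: str) -> List[str]:
    """Turn 'git branch' output into names, skipping the current branch."""
    names = []
    for line in output.splitlines():
        if not line.strip() or line.startswith("*"):
            continue  # blank line, or the branch that is checked out now
        names.append(line[2:].strip())  # drop the two-character marker column
    return names


def main() -> None:
    # 'git branch --merged' lists branches already merged into HEAD.
    result = subprocess.run(
        ["git", "branch", "--merged"],
        check=True, capture_output=True, text=True,
    )
    for name in parse_branches(result.stdout):
        print(name)

# When installing this as a script, finish the file with a call to main().
```

Save it as git-show-merged-branches somewhere in your PATH, add the call to main() at the bottom, and make the file executable. After that, git show-merged-branches should find it.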

If you’re someone writing a tool for other command-line aficionados to use, enjoy and extend, you can add this kind of extensibility to your tool. It’s the kind of change that turns users into fans.

The command you ship to users keeps the same name it’s always had: if I run kubectl plugin list, the shell looks for a command named kubectl and runs it with an array of arguments. This means the top-level tool has to discover any subcommands for itself.

Say I run kubectl scan images. That’s not a kubectl feature, so kubectl searches every folder in my PATH. First it looks for kubectl-scan-images, then if it doesn’t find that it looks for kubectl-scan and runs it with images as an argument. Making this kind of lookup happen is work for the tool author that pays off when power users write, add and share their extensions.
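If you’re writing your own extensible tool, the longest-match lookup described above can be sketched in a few lines of Python. The function name find_plugin and the tool name mytool are hypothetical, and this sketch ignores the question of dashes inside a subcommand word. It uses shutil.which from the standard library to search the PATH.

```python
import shutil
from typing import List, Optional, Tuple


def find_plugin(
    tool: str, args: List[str], path: Optional[str] = None
) -> Optional[Tuple[str, List[str]]]:
    """Return (executable, remaining_args) for the longest matching plugin."""
    # Only leading words can be part of a subcommand name; stop at the first flag.
    words = []
    for arg in args:
        if arg.startswith("-"):
            break
        words.append(arg)

    # Try the longest candidate first, then drop one word at a time.
    for count in range(len(words), 0, -1):
        candidate = "-".join([tool] + words[:count])
        found = shutil.which(candidate, path=path)  # path=None means use $PATH
        if found:
            return found, args[count:]
    return None
```

With only mytool-scan installed, find_plugin("mytool", ["scan", "images"]) picks mytool-scan and passes images along as an argument. Once mytool-scan-images exists as well, the longer name wins.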

You might be wondering what happens if a subcommand contains a “-” in its name, like show-merged-branches from earlier. There are conventions on how to handle this; however, each tool seems to use its own convention. If you’re writing an extension, check the documentation for the tool you’re extending. If you’re making a tool extensible, copy an existing convention that suits you.

Earlier, I mentioned shell completion. If you’re the author of a tool that supports subcommands, and you have a shell completion helper, you’ll need to make that helper understand the subcommands that users have added. That way, you’re letting end users of the command line tool make use of the same discovery mechanism whether the subcommand is built-in or not.
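One way to approach this is to have the tool itself work out the candidates, so that each shell’s completion helper only has to ask for them. Here’s a rough sketch, assuming the simple convention where everything after the tool- prefix is the subcommand name. The names plugin_subcommands, complete and mytool are invented for this example.

```python
import os
from typing import Iterable, List, Optional


def plugin_subcommands(tool: str, path: Optional[str] = None) -> List[str]:
    """List subcommands provided by executable 'tool-*' files on the PATH."""
    prefix = tool + "-"
    search = path if path is not None else os.environ.get("PATH", "")
    found = set()
    for directory in search.split(os.pathsep):
        if not os.path.isdir(directory):
            continue  # skip empty or missing PATH entries
        for entry in os.listdir(directory):
            full = os.path.join(directory, entry)
            if (entry.startswith(prefix) and os.path.isfile(full)
                    and os.access(full, os.X_OK)):
                found.add(entry[len(prefix):])
    return sorted(found)


def complete(tool: str, builtins: Iterable[str], typed: str,
             path: Optional[str] = None) -> List[str]:
    """Offer built-in and plugin subcommands that start with what was typed."""
    candidates = set(builtins) | set(plugin_subcommands(tool, path))
    return sorted(c for c in candidates if c.startswith(typed))
```

Because built-in and plugin names end up in the same list, someone pressing Tab can’t tell which subcommands shipped with the tool and which were added later.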

To summarise:

- For tools that do many related things, using a subcommand structure lets you organise and group different features. Taking a consistent approach reduces the mental burden on end users.

- Shell completion helps users discover a command-line tool’s features and behaviours. The more commands or options that a tool has, the more useful it is to have completion in place.

- You can let users add their own / third-party subcommands, without them needing to download the source code for the main tool or to learn about its development process. Lowering this barrier to entry means that end users can comfortably add extensions to fill gaps and meet their own needs.


This blog is written exclusively by The Scale Factory team. We do not accept external contributions.