Please note that this post, first published over a year ago, may now be out of date.

Is paging a good fit for today’s world of cloud-native code and utility infrastructure? Notable projects, including Kubernetes, recommend that you run with swap disabled. I’m going to explain what I believe and why.

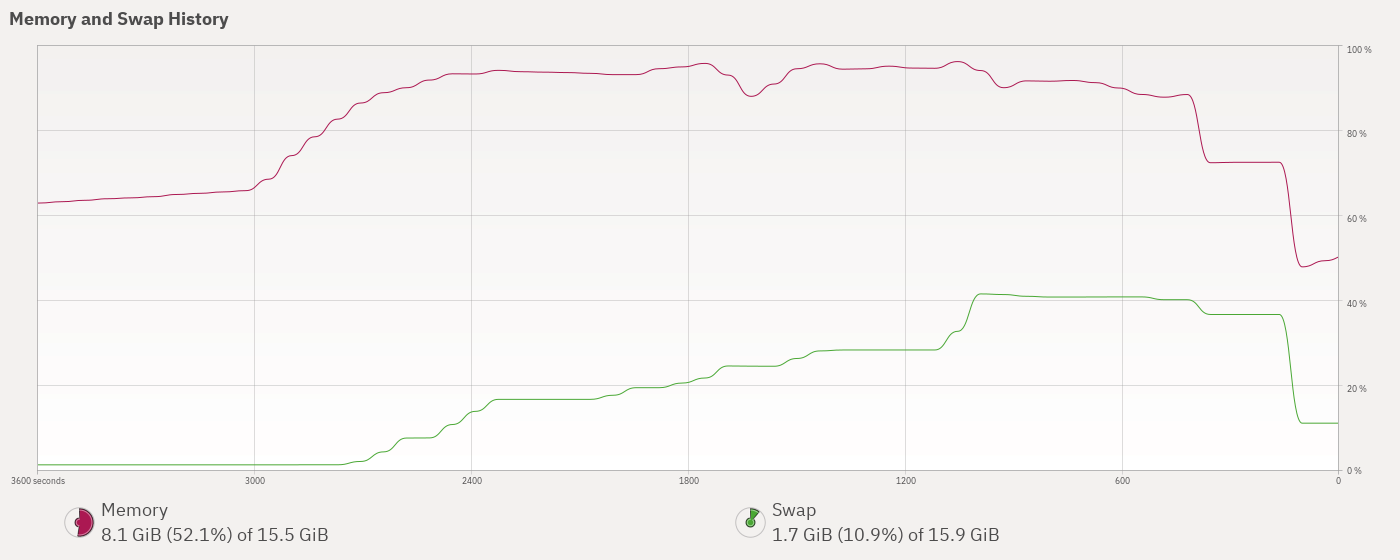

Graph showing memory and swap history

Since people first started using computers for business, they’ve found themselves short on resources at some times and with a surplus at others. Virtual memory techniques, like paging, let a program use more memory than the computer physically has.

Because some of the jargon isn’t always clear, I’ll define some terms first:

“Virtual memory” is, in truth, the only kind of addressing in mainstream operating systems: userland threads see a virtual address space, and the OS (with help from the CPU’s memory management unit) maps that onto physical RAM.

“Paging” means updating the mapping for a page (a fixed-size block of memory, often 4 KiB). For example, say a process asks the kernel for a new blank page of memory. The kernel doesn’t need to find any physical memory for it yet. As soon as a thread tries to read or write there, the CPU raises a page fault, the operating system steps in, and it makes sure that there really is a page of blank memory at just the right place.
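To see that on-demand behaviour for yourself, here’s a minimal sketch, assuming a Linux host and Python 3.9+ (the `resource` module is Unix-only). It maps some anonymous memory, reads one byte from each page, and counts the minor page faults the process takes along the way. The helper names `touch_pages` and `demo` are hypothetical, made up for this illustration.

```python
import mmap
import resource

PAGE = mmap.PAGESIZE  # the OS page size, often 4096 bytes


def minor_faults() -> int:
    """Minor page faults this process has taken so far (Unix only)."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_minflt


def touch_pages(buf: mmap.mmap, n_pages: int) -> tuple[int, bool]:
    """Read one byte from each page; return (faults taken, whether all bytes were zero)."""
    before = minor_faults()
    all_zero = True
    for i in range(n_pages):
        if buf[i * PAGE] != 0:
            all_zero = False
    return minor_faults() - before, all_zero


def demo(n_pages: int = 64) -> tuple[int, int, bool]:
    # Anonymous private mapping: the kernel reserves address space now but
    # only maps a real (zero-filled) page when each one is first touched.
    buf = mmap.mmap(-1, n_pages * PAGE, flags=mmap.MAP_PRIVATE)
    try:
        first, zeros_first = touch_pages(buf, n_pages)    # faults as pages get mapped
        second, zeros_second = touch_pages(buf, n_pages)  # already mapped this time
    finally:
        buf.close()
    return first, second, zeros_first and zeros_second


if __name__ == "__main__":
    first, second, zeros = demo()
    print(f"first pass: {first} faults, second pass: {second} faults, all zero: {zeros}")
```

On a typical Linux box you’d expect roughly one fault per page on the first pass and few or none on the second, though exact counts depend on the kernel and its settings (transparent huge pages, for instance, can map larger chunks at once).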

Paging is also how the system deals with memory pressure. Let’s say the thread read through all those zeroes and never wrote there. The operating system was smart enough to track this and knows that it’s still a blank page. If some other thread needs the physical memory for something more important, the OS can unmap the page from the first thread and hand it to the other thread.

“Swap” is where the operating system can put dirty pages that don’t have a better home. On Linux, this means anonymous process memory (such as the heap and stack), anonymous shared pages, and tmpfs data. Some kinds of page don’t need to go to swap. If a process uses a shared mmap() mapping to access a file, the kernel writes any changed pages back to that file instead. Clean pages don’t need to be written anywhere, because they haven’t changed: the kernel can simply drop them and fetch them again later.

Okay, so those are the terms I’m talking about. What’s the big deal about turning swap on?

First off, you effectively get more memory. At the cost of slower access to those pages, the kernel can shunt data pages that aren’t being used off to swap. The physical memory they occupied is then available for caching block device data (that is, local files) or for applications.
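On Linux, `/proc/meminfo` shows both sides of this trade: how much has been pushed out to swap (`SwapTotal` minus `SwapFree`) and how much RAM is holding cached file data (`Cached`). Sampling it periodically is one way to produce a graph like the one above. This is a minimal sketch, assuming Linux; the function names are hypothetical, and the values it reports are in KiB (the file labels them `kB`).

```python
import os


def parse_meminfo(text: str) -> dict[str, int]:
    """Turn /proc/meminfo-style text into {field: value}; most values are in KiB."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def swap_and_cache(fields: dict[str, int]) -> dict[str, int]:
    """Summarise how much memory sits in swap versus in the page cache (KiB)."""
    return {
        "swap_used_kib": fields.get("SwapTotal", 0) - fields.get("SwapFree", 0),
        "page_cache_kib": fields.get("Cached", 0),
        "available_kib": fields.get("MemAvailable", 0),
    }


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(swap_and_cache(parse_meminfo(f.read())))
```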

On modern systems, fetching something back in from swap is fairly fast: maybe 1 ms for cloud storage, and much less for a local SSD. It wasn’t always that way, though. Only a few years ago it was common to use spinning hard disks for swap, and those are a lot slower, at maybe 20 ms per random read. That’s up to a million times slower than the tens of nanoseconds it takes to access RAM.

(If you want to see how these figures have changed over time, check out Colin Scott’s nifty visualisation.)
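To make the scale concrete, here’s the arithmetic as a small Python sketch. The 20 ms and 1 ms device figures come from the paragraph above; the 20 ns RAM access time is an assumption picked at the fast end of typical main-memory latency. Integers keep the division exact.

```python
# Ballpark latencies, in nanoseconds.
HDD_SWAP_NS = 20_000_000    # ~20 ms for a random read on a spinning disk
CLOUD_SWAP_NS = 1_000_000   # ~1 ms for cloud block storage
RAM_NS = 20                 # assumption: a fast main-memory access


def slowdown(device_ns: int, ram_ns: int = RAM_NS) -> int:
    """How many RAM accesses fit into the time of one page-in from the device."""
    return device_ns // ram_ns


if __name__ == "__main__":
    print("spinning disk:", slowdown(HDD_SWAP_NS))                 # 1000000
    print("cloud storage:", slowdown(CLOUD_SWAP_NS))               # 50000
    print("disk vs 100 ns RAM:", slowdown(HDD_SWAP_NS, 100))       # 200000
```

If your RAM is nearer 100 ns per access, the disk is "only" 200,000 times slower, which doesn’t change the conclusion much.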

Say you’re running an app on a system with old-style hard disks. Because the system’s been busy and the app was in the background, the operating system paged out some rarely touched bits of its code and found better uses for that physical memory. Now you come back, and the app needs to run that code.

Fetching that memory back in could easily take a second or longer if you’re unlucky. Once it’s back in RAM, accesses are quick and your app starts to look sprightly again. On a desktop, that lag is annoying. On a busy web server, the stall might let a backlog of requests build up, and a once-a-day background task that briefly locks the app might hurt performance for minutes.
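It doesn’t take many page faults to lose a whole second. This rough Python sketch assumes each page needs its own synchronous random read. In practice Linux’s swap readahead (tuned by the `vm.page-cluster` sysctl) can pull in neighbouring pages with one I/O, so treat this as a worst-case estimate. The function name is hypothetical.

```python
def stall_seconds(pages: int, latency_ms: int) -> float:
    """Time to fault `pages` pages back in, one synchronous read at a time."""
    return pages * latency_ms / 1000


if __name__ == "__main__":
    # 50 scattered pages (200 KiB with 4 KiB pages) is enough to lose a second.
    print(stall_seconds(50, 20))  # 1.0  (spinning disk, ~20 ms per read)
    print(stall_seconds(50, 1))   # 0.05 (cloud storage, ~1 ms per read)
```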

Maybe you’ve seen that behaviour and tried different ways to fix it. I’ve had to deal with these kinds of symptoms myself, so I’ll run through some of the fixes I know about.

- Scale horizontally (add more instances / servers / pods and run the app across these in parallel). This works great if it’s an option. On the other hand, if each instance can still stall, adding one server might only turn an outage affecting ⅓ of end users into one that affects ¼ of them. Hardware isn’t always the answer.

- Scale vertically (assign more memory / run on bigger instances). The more RAM you have, the more likely it is that rarely used pages stay resident.

- Tweak the app’s runtime environment. You can set swappiness (yes, it’s a thing) per cgroup, for example through cgroup v1’s memory.swappiness file, so that the kernel is less inclined to page out the app’s memory. Apps with enough privilege, or a large enough RLIMIT_MEMLOCK allowance, can use mlock() or mlockall() to keep important pages in memory; I’ve also used memlockd on resource-constrained servers for the same kind of effect.

- Add more swap. The kernel reclaims memory, including pages of application code, when it has a better use for that physical RAM. With no swap device, or not enough, the kernel has to keep anonymous application data in physical memory because there’s nowhere else for it to go, so it can only reclaim file-backed pages such as code and cache. If the kernel can use swap space, it has more choices about what to evict.

- Update the app. When a low-priority thread can hold a lock that blocks more important threads, in effect preempting them (a priority inversion), that’s not helpful. Is it possible to rewrite the system to skip that locking, or to isolate the background task from the other threads? Maybe instead you can move the background task out into a scheduled function, cron job, or similar.
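For the per-cgroup swappiness tweak in the list above, here’s a minimal sketch, assuming a cgroup v1 memory hierarchy mounted at `/sys/fs/cgroup/memory` and a hypothetical cgroup called `myapp`. Lower values make the kernel less keen to swap out that cgroup’s memory. The 0-100 bounds check is a conservative assumption on my part rather than a rule quoted from the kernel, and actually writing the file needs root or delegated access to the cgroup.

```python
import os


def swappiness_setting(cgroup_dir: str, value: int) -> tuple[str, str]:
    """Return (path, contents) for setting swappiness on a cgroup v1 memory cgroup."""
    if not 0 <= value <= 100:
        raise ValueError("keep swappiness within the classic 0-100 range")
    return os.path.join(cgroup_dir, "memory.swappiness"), f"{value}\n"


def apply_setting(path: str, contents: str) -> None:
    """Write the value out; needs root or delegated write access to the cgroup."""
    with open(path, "w") as f:
        f.write(contents)


if __name__ == "__main__":
    # Hypothetical cgroup for the app; adjust to wherever your memory cgroups live.
    path, contents = swappiness_setting("/sys/fs/cgroup/memory/myapp", 10)
    print(f"would write {contents.strip()!r} to {path}")
```

Keeping the pure `swappiness_setting` separate from `apply_setting` means you can check the path and value before touching a live system.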

You’ve probably noticed that these come down to different flavours of either fixing the app or providing more memory. I didn’t try disabling swap.

On a system under memory pressure, paging will still happen even if you have no swap space. The kernel will have to pick program code and other file-backed pages in memory and discard them to reclaim space, then read them back from disk when they’re needed again. With that in mind, I don’t think reducing or disabling swap is the right fix there.
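You can watch this on Linux through the counters in `/proc/vmstat`: `pgmajfault` counts page faults that had to wait for I/O (re-reading discarded code from disk counts, and so do swap-ins), while `pswpin` and `pswpout` count pages moved in and out of swap. On a host with no swap, the swap counters stay at zero but major faults can still climb under memory pressure. This is a minimal sketch with hypothetical function names, assuming Linux.

```python
import os
import time


def parse_vmstat(text: str) -> dict[str, int]:
    """Turn /proc/vmstat ("name value" per line) into a dict of counters."""
    counters: dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def paging_activity(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Change in major faults and swap traffic between two samples."""
    keys = ("pgmajfault", "pswpin", "pswpout")
    return {k: after.get(k, 0) - before.get(k, 0) for k in keys}


def sample() -> dict[str, int]:
    with open("/proc/vmstat") as f:
        return parse_vmstat(f.read())


if __name__ == "__main__" and os.path.exists("/proc/vmstat"):
    first = sample()
    time.sleep(1)
    print(paging_activity(first, sample()))
```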

Maybe you’re worried about how swap affects data confidentiality: if your app has data in memory, and those data get paged out, that creates a more durable record of those data. You might have secret keys and worry about them going onto disk, and that’s fair enough.

I’d always recommend that you encrypt your swap space, using ephemeral keys that are only ever held in RAM. Apps can mlock() sensitive pages so that these aren’t written to swap, regardless of system settings. If you can’t rely on every app doing that, and you really don’t want sensitive information written to swap even in encrypted form, then you’re going to have to run without swap and make sure you have enough memory.
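As an illustration of an app locking its own secrets, here’s a minimal Python sketch that calls the C library’s `mlock()` and `munlock()` through `ctypes`. It assumes Linux or macOS, where loading the running process’s symbols exposes those libc functions; `lock_secret`, `wipe_and_unlock` and the 32-byte key buffer are hypothetical. An unprivileged process can only lock up to its `RLIMIT_MEMLOCK` limit, so the sketch reports an errno rather than assuming success.

```python
import ctypes

# Assumes Linux or macOS: CDLL(None) gives access to libc's mlock/munlock.
_libc = ctypes.CDLL(None, use_errno=True)
_libc.mlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
_libc.mlock.restype = ctypes.c_int
_libc.munlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
_libc.munlock.restype = ctypes.c_int


def lock_secret(buf: ctypes.Array) -> int:
    """Pin buf's pages in RAM so they can't be swapped out. Returns 0 or an errno."""
    if _libc.mlock(ctypes.addressof(buf), ctypes.sizeof(buf)) != 0:
        return ctypes.get_errno()  # e.g. ENOMEM if over RLIMIT_MEMLOCK
    return 0


def wipe_and_unlock(buf: ctypes.Array) -> int:
    """Zero the secret, then release the lock. Returns 0 or an errno."""
    ctypes.memset(ctypes.addressof(buf), 0, ctypes.sizeof(buf))
    if _libc.munlock(ctypes.addressof(buf), ctypes.sizeof(buf)) != 0:
        return ctypes.get_errno()
    return 0


if __name__ == "__main__":
    key = ctypes.create_string_buffer(32)  # hypothetical 32-byte key buffer
    err = lock_secret(key)
    print("locked" if err == 0 else f"mlock failed with errno {err}")
    # ... fill and use `key` here ...
    if err == 0:
        wipe_and_unlock(key)
```

This only protects that one buffer: if the secret ever passes through ordinary Python objects (such as `bytes`), copies can live elsewhere in the heap, which is one reason encrypted swap is still worth having.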

There’s a theory that having swap enabled means that there’ll be more writes to storage and the system will slow down bringing back pages from disk. I don’t really agree with this. If the system’s not under memory pressure, the most important data can live in memory. If the system is under memory pressure, something will have to get evicted to make room, and when that something is needed again, the system will have to fetch it back.
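A toy model helps show why swap widens the kernel’s choices. This is a deliberately simplified sketch, not how the kernel actually decides (Linux works from LRU-style lists rather than knowing access rates up front): each hypothetical page has a made-up access rate, RAM holds a fixed number of pages, and the model keeps the hottest pages it’s allowed to evict. Without swap, idle anonymous pages are stuck in RAM, so hot file-backed pages get pushed out instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    name: str
    kind: str  # "anon" needs swap to be evicted; "file" can be dropped and re-read
    rate: int  # hypothetical accesses per minute


def misses_per_minute(pages: list[Page], capacity: int, swap: bool) -> int:
    """Toy model: keep the hottest evictable pages resident, count the rest as misses."""
    if swap:
        pinned, candidates = [], list(pages)
    else:
        # Without swap, anonymous pages have nowhere to go, so they stay put.
        pinned = [p for p in pages if p.kind == "anon"]
        candidates = [p for p in pages if p.kind == "file"]
    free_slots = capacity - len(pinned)
    if free_slots < 0:
        raise MemoryError("anonymous memory alone exceeds RAM")
    candidates.sort(key=lambda p: p.rate, reverse=True)
    resident = {p.name for p in pinned + candidates[:free_slots]}
    return sum(p.rate for p in pages if p.name not in resident)


PAGES = [
    Page("idle-heap-1", "anon", 1),
    Page("idle-heap-2", "anon", 1),
    Page("app-code", "file", 30),
    Page("hot-data-file", "file", 50),
    Page("config-file", "file", 5),
]

if __name__ == "__main__":
    print("with swap:", misses_per_minute(PAGES, capacity=3, swap=True))      # 2
    print("without swap:", misses_per_minute(PAGES, capacity=3, swap=False))  # 35
```

With room for three pages, this toy reports 2 misses a minute with swap and 35 without: the same RAM, but more freedom over what to evict.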

Swap space gives the kernel more options for using the finite amount of real RAM. Sharper minds than mine have tuned the way the kernel makes these choices. For most workloads, I run with swap enabled and trust those sharp minds.

We’ve been an AWS SaaS Services Competency Partner since 2020. If you’re building SaaS on AWS, why not book a free health check to find out what the SaaS SI Partner of the Year can do for you?

This blog is written exclusively by The Scale Factory team. We do not accept external contributions.